TLDR: AI is one of humanity’s greatest technological achievements and is already a game-changer in how we think about and execute workplace learning strategies. But, as with any new technology, it’s not all immediately or exclusively positive news.

Some takeaways:

- AI is a powerful personalization tool that can cater to learners’ individual patterns of behavior and level of knowledge acquired.

- True personalization of learning can be achieved if the AI has access to the right kind of data in the right amount.

- Data privacy might be a concern; bias in the algorithms’ interpretation of data is another possible one.

- For many organizations, true personalization with AI might not be achievable or pertinent at this point.

- True AI is still fairly expensive, but rule-based engines are the next best thing: they have been around for a while and offer a decent level of personalization.

- You can find the best solution for your organization with the help of a thorough initial analysis; you’ll likely discover that you need to improve other areas (such as measuring work performance) before you dive into learning.

- AI readiness is a thing – some companies just aren’t there yet, and investing in powerful AI won’t make a significant difference in goals or personalization levels achieved unless other aspects in the company are addressed first (like the data privacy talk, which impacts all employees).

- Always ask questions to know what kind of technology you are paying for and what it can do.

- AI truly is amazing, but organizations will reap benefits at different speeds as they become adopters.

- The robots aren’t taking our jobs.

Defining the terms

Artificial Intelligence is an umbrella term for the development of computer systems that can perform tasks that typically require human intelligence, such as learning, reasoning, problem-solving, perception, and language understanding. It encompasses a range of technologies, including machine learning (ML), natural language processing (NLP), robotics, and computer vision, that aim to create intelligent machines capable of performing complex tasks autonomously, with minimal human intervention. AI creates systems that can learn from experience, adapt to new situations, and make data-based decisions to improve human productivity and quality of life.

Rule-based automation is not generally considered a form of AI, although it may share some similarities with certain AI techniques. Rule-based automation involves creating predefined rules or instructions that dictate a system’s behavior under specific conditions.

In this article, I will be using “AI” or “true AI” in the sense of the first paragraph above, using ML, NLP, and other technologies to personalize the learning experience.

Let’s first look at the benefits of AI in L&D

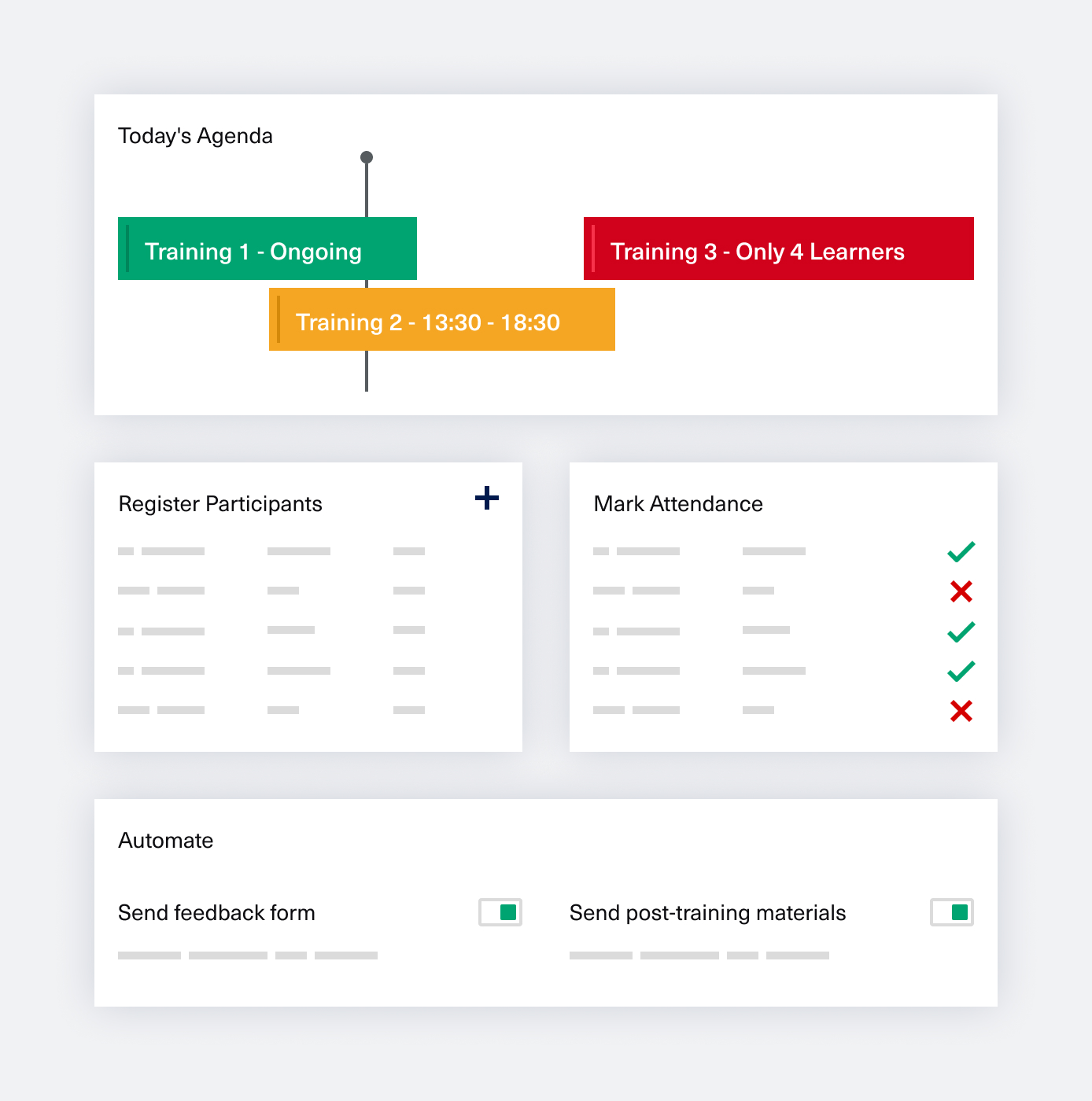

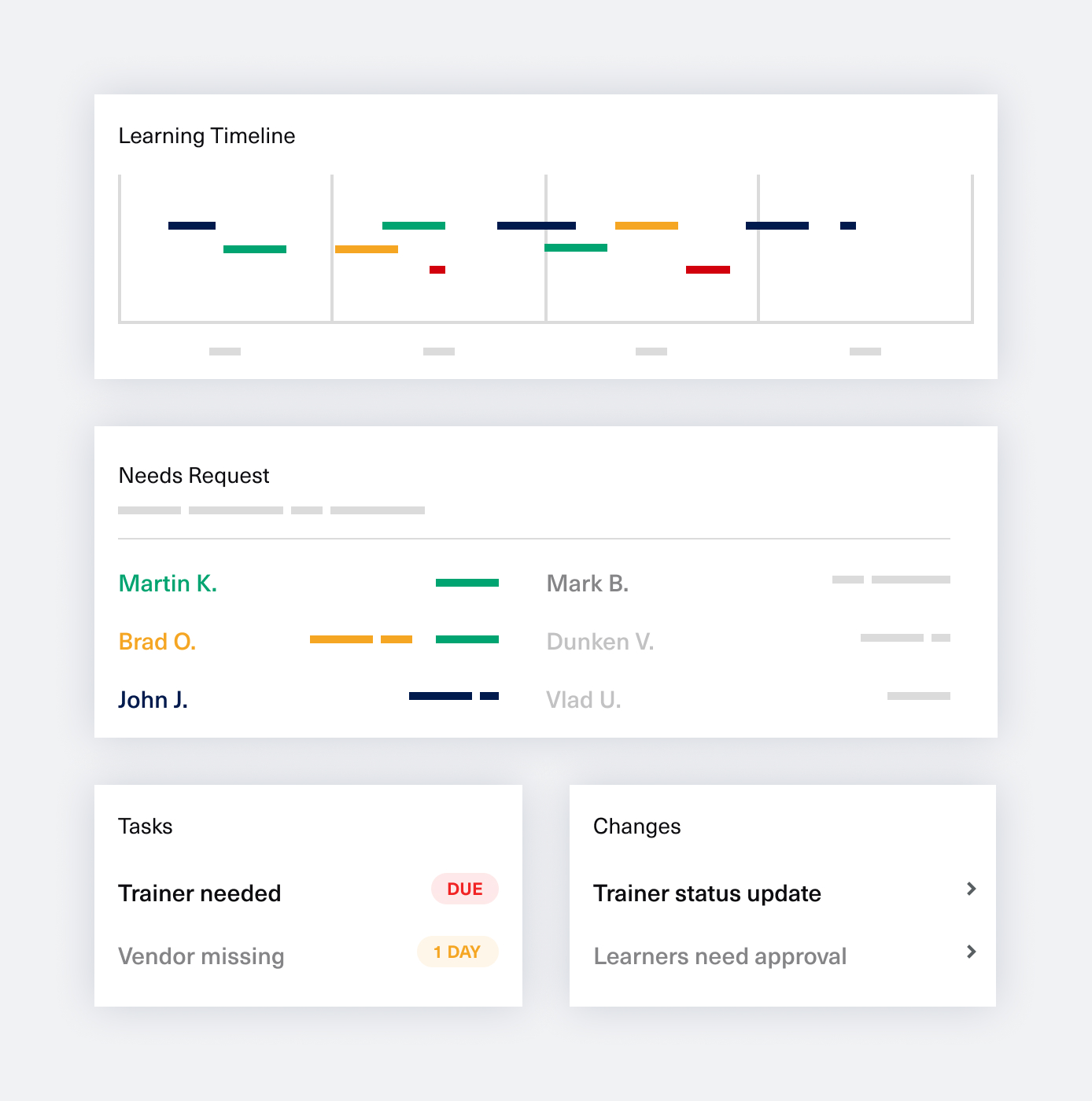

One of the primary benefits is the ability to personalize learning. Using AI algorithms, the learning offer can adapt to individual learners’ needs, considering their requirements, preferences, and skill levels, alongside the company’s people development goals and strategic business objectives. This approach ensures that each employee receives a tailored learning experience that maximizes their potential, aligning with the company’s growth strategy.

AI can also help companies analyze data to identify skill gaps and areas for improvement. For example, by analyzing employees’ performance data, AI algorithms can identify the specific skills that need improvement and create personalized learning paths to address those gaps. This approach ensures that employees receive the training they need to perform in their roles effectively. Of course, this analysis depends strongly on your organization’s ability to capture employee performance data. If you’re considering AI for improving employee skill development, specifically to increase performance, I would suggest first working out IF and HOW you can extract this performance data.

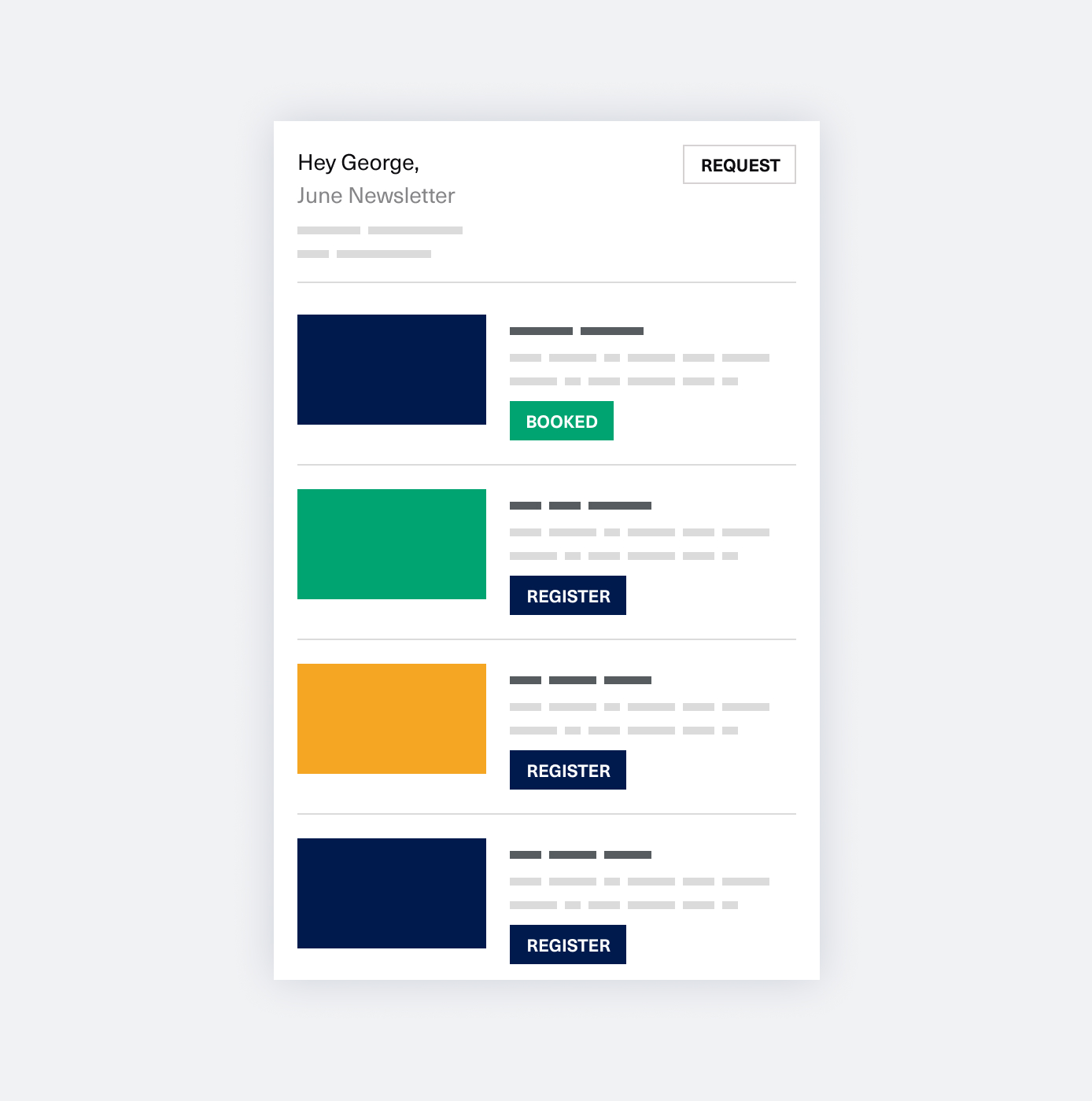

Another way AI is transforming L&D is through chatbots. They can provide instant assistance to employees when they have questions or need help with a particular task, especially if there’s a good connection made with the company’s knowledge base. This approach ensures that employees receive immediate support, which can improve their productivity and confidence. Similarly to the point above, you should ensure that your company’s knowledge base is up-to-date, consistent, and readily usable as a training resource for the chatbot’s algorithm. For example, let’s say you work for a company whose core business is creating a software product. If there isn’t sufficient and accurate documentation about the product, especially edge cases, and thorough FAQs, the chatbot won’t have anything to extract answers from to give to the employees working in support or sales.

In addition to personalized learning and chatbots, AI is also improving the quality of learning materials. AI algorithms can analyze content and provide feedback to instructors on how to improve their courses. This approach ensures the content is engaging, relevant, and effective while keeping up with the times, technological advancement, and other relevant information that plays a role in creating and maintaining learning materials.

Some other benefits of AI in L&D are automated content creation and curation with the help of Large Language Models (LLMs) and image/video generation technologies; time and cost efficiency gains from offloading the more menial aspects of L&D work; enhanced accessibility and flexibility of the content; and integration with existing systems and technologies for seamless learning experiences.

As you can probably already tell, it’s hard to list a benefit without immediately adding a word of caution or advice on how best to take advantage of it. The point I’m trying to make is that, for most organizations, integrating AI doesn’t happen in one stage or within a very short time frame. There is a discussion to be had about the company’s AI readiness, which has a lot to do with creating or cleaning up data sources, thinking about integrations, and defining the right success metrics expected from this implementation. I will likely write a specific piece on AI readiness separately, as it is a topic worth exploring in more depth.

What are some of the downsides or difficulties of integrating AI into L&D?

Some potential challenges need to be considered when using AI in L&D. Any organization planning to integrate AI should consider these and, ideally, prepare in advance for them.

Bias: AI algorithms can sometimes be biased due to the data used to train them. It’s no secret that large data sets have their particular bias patterns built in. We’ve seen this with AI-based face recognition, where people with a darker complexion are at a disadvantage, and in hiring, where gender bias was highlighted; other examples are a browser search away. This can result in the reinforcement of stereotypes or the exclusion of certain groups of people. For example, if an AI algorithm is trained on data from a specific demographic, it may not be effective for people from other cultural backgrounds. In this particular case, there must be a strategy that actively compensates for the bias – which opens up talks about cultural diversity in the learning content, approach, and communication.

Lack of Human Interaction: Think back to the last time you had an issue and had to talk to a chatbot. How did it feel? Was your problem fully resolved without the need for a human to step in? AI can sometimes be perceived as impersonal, and there is a risk that it could replace human interaction entirely (in some specific use cases). This could lead to a lack of engagement and motivation among learners, as they may not feel connected to the learning experience.

Just put yourself in the shoes of a learner, trying to understand a particular process or tool, interacting exclusively with a chatbot. At some point, I believe frustration takes over, and you will need to interact with someone who can give you answers more fluidly based on your complexity of thinking and of asking questions, or the scenarios you come up with. You might have already experienced this if you tried out ChatGPT and wanted to get to the bottom of a complex question or problem – there’s only so much patience one can have in rephrasing prompts to give to an AI.

Technical Issues: AI systems can be complex, and there is always the risk of technical issues arising, such as software glitches or system crashes. This could disrupt the learning experience and cause frustration for learners. Of course, this is rather marginal compared to other problems. Still, this issue typically impacts many learners simultaneously, which can influence day-to-day business negatively – for example, in a Customer Support setting.

Privacy Concerns: The use of AI in L&D requires the collection and analysis of employee data, which can raise privacy concerns. Organizations must ensure that learners’ personal data is protected and that they are transparent about how the data is being used. Consent from employees is essential, as people have a right to know what kind of systems are using what kind of data to personalize the learning experience. This is typically a rather divisive conversation, and companies tend to sit strongly on one side or the other – with either strict data protection policies in place (thus less effective AI models) or complete transparency (which understandably makes employees feel watched). The absolute worst situation is a company secretly collecting employee data for “the right reasons”; unfortunately, there are some out there.

Cost: Implementing AI technology can be costly, especially for smaller organizations and compared to the final perceived ROI. The cost of developing, implementing, and maintaining an AI system can be a significant investment, which may not be feasible for some organizations. These costs derive from infrastructure and the prior effort put into such systems to bring them to a point where they are actually usable. AI tech costs are bound to decrease over time as more and more data enters the various models. But this means that early adopters are paying for amortization that laggards will ultimately benefit from. It’s similar to why new drugs are expensive until the patents expire and they become widely available, as cheap alternatives take over the market.

Some other potential downsides include AI’s limited ability to handle complex or creative tasks – such as generating content for various roles, scenarios, edge cases, or complex learning experiences – and a high initial cost of implementation (AI requires a lot of data preparation on the organization’s part if it is to offer relevant recommendations early on). Other downsides might be a need for specialized skills and expertise to operate and maintain AI systems (aka hiring data scientists, if you weren’t planning on it), and possible negative effects on mental health if learners feel overwhelmed or disconnected from the learning process.

Overall, AI has the potential to revolutionize L&D, but it’s crucial to be aware of these challenges and take steps to address them proactively. Organizations must carefully evaluate their needs and capabilities before implementing AI in their L&D programs and technology stack.

AI vs. rule-based engines in L&D – what is this about?

Differences between AI and rule-based engines

Let’s first clarify what we’re talking about and why it’s important to know the difference. Simply put, rule-based engines need initial (and occasional) human input (the “rules”), whereas AI algorithms are fed a data set, learn from it and from subsequent user interactions (aka learners learning), and keep adjusting. There are some rules here, too (the ones based on which the algorithm learns), but let’s keep it simple for now.

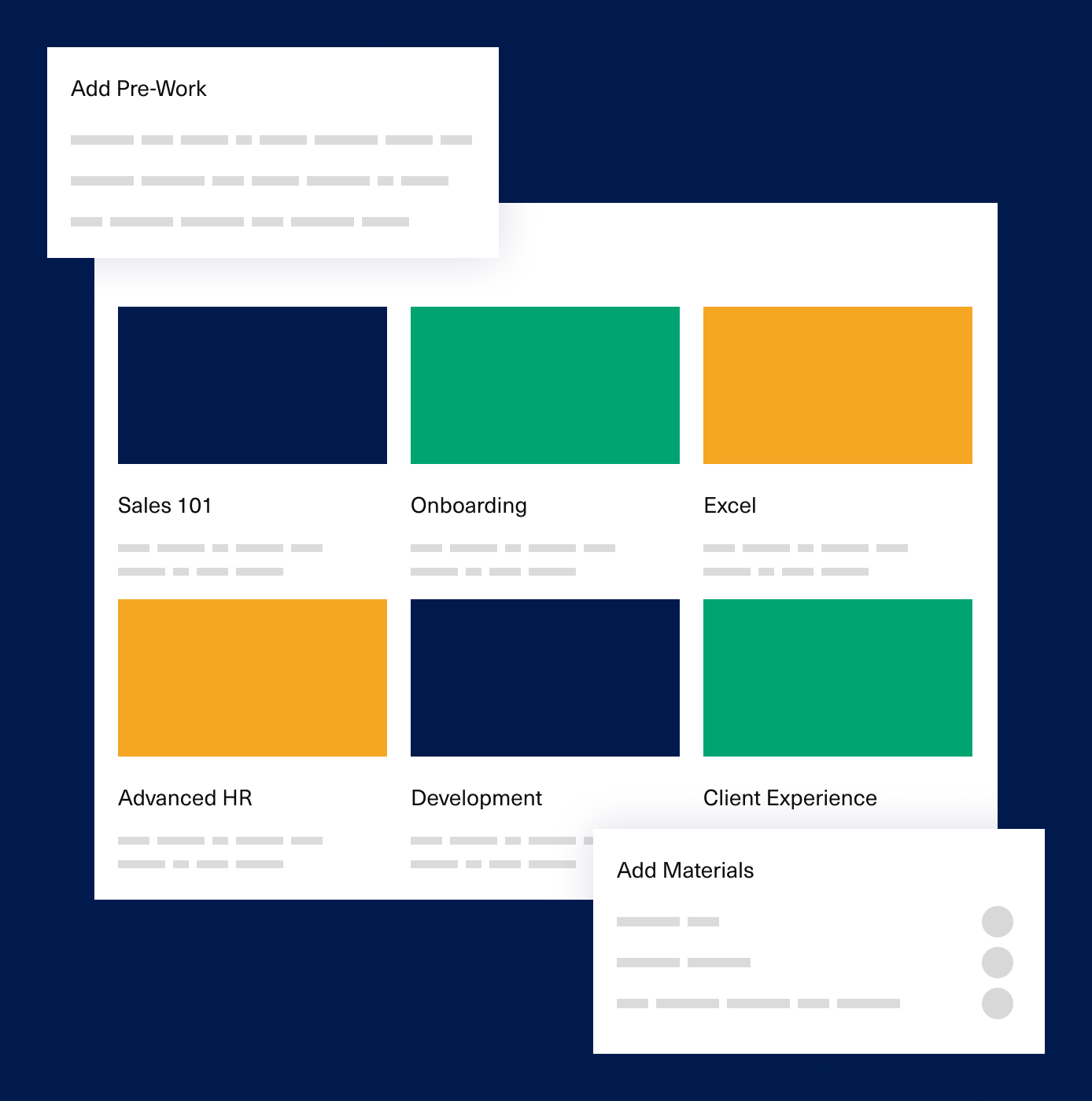

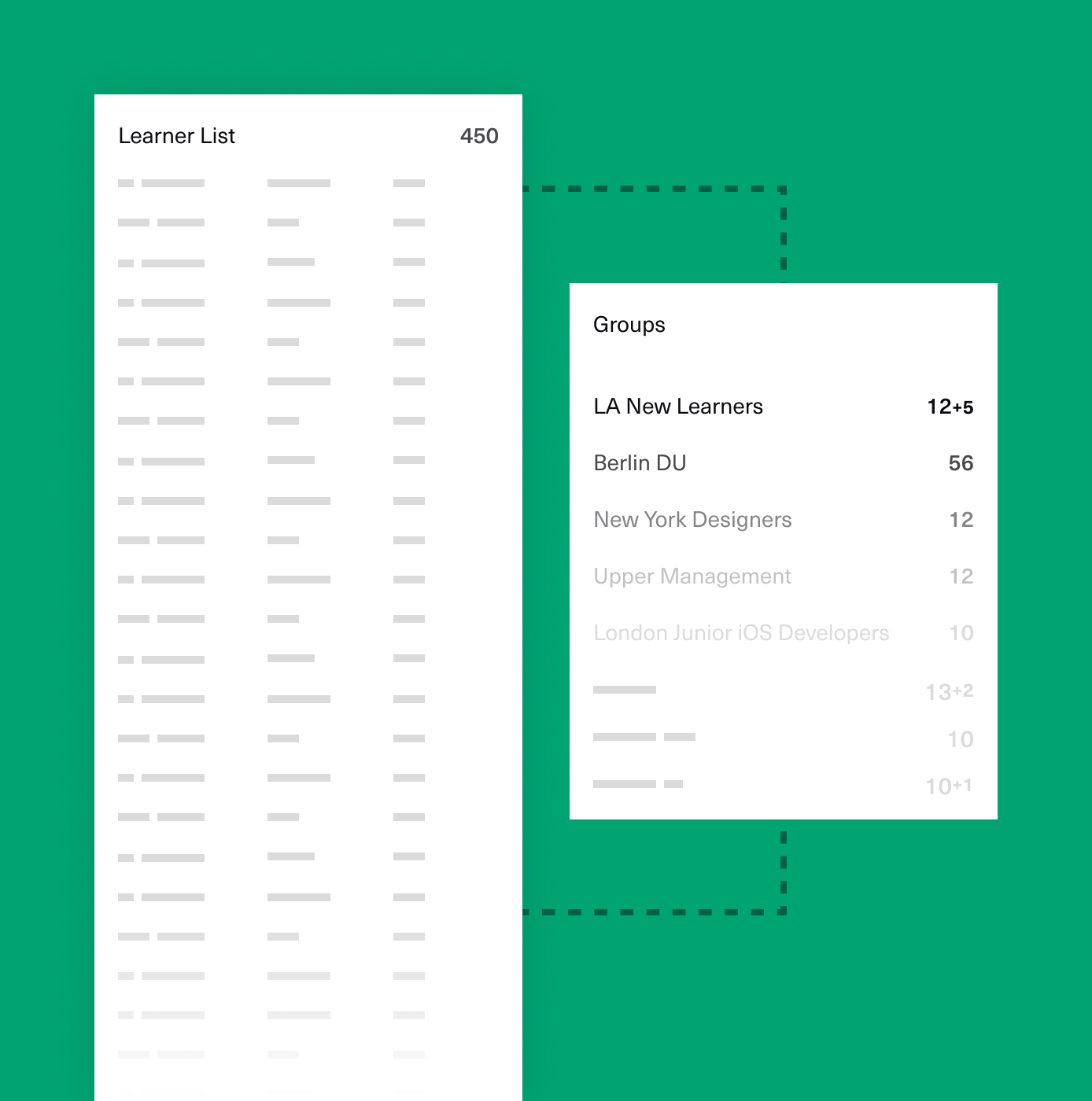

In the context of L&D technology, a rule-based engine is a type of software system that uses a set of pre-defined rules or conditions to personalize and automate the learning recommendations for each individual user. These rules can be based on the user’s skill level, learning preferences, job role, geographical location, or performance data.

For example, a rule-based engine in an online learning platform may use the user’s previous course history, quiz scores, and other data to suggest the most appropriate next course or learning activity for them based on “if-then” statements (“if employee completes X course, give them access to Y and Z courses” and so on). It may also adjust the difficulty level of the content or provide personalized feedback based on the user’s performance (“if employee completes at least three level A pieces of content, give them access to level B content from now on”).
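To make the “if-then” idea concrete, here is a minimal sketch in Python of the two rules quoted above. It is an illustration only, not how any particular platform implements its engine: the `Learner` record, its field names, and the `unlocked_content` function are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Learner:
    # Hypothetical learner record; the field names are assumptions for this sketch.
    completed_courses: set[str] = field(default_factory=set)
    completed_level_a_items: int = 0


def unlocked_content(learner: Learner) -> set[str]:
    """Apply two hand-written if-then rules and return what they unlock."""
    unlocked: set[str] = set()

    # Rule 1: if the employee completes course X, give them access to Y and Z.
    if "X" in learner.completed_courses:
        unlocked.update({"Y", "Z"})

    # Rule 2: if the employee completes at least three level A pieces of
    # content, give them access to level B content from now on.
    if learner.completed_level_a_items >= 3:
        unlocked.add("level B")

    return unlocked


new_hire = Learner(completed_courses={"X"}, completed_level_a_items=1)
veteran = Learner(completed_courses={"X"}, completed_level_a_items=4)

print(sorted(unlocked_content(new_hire)))
print(sorted(unlocked_content(veteran)))
```

Every behavior here was written by a person in advance. If you want the engine to react to something new, someone has to add or change a rule.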

A rule-based engine in L&D technology aims to provide a more efficient and effective learning experience for each user while allowing for scalability and automation in learning content delivery. By using pre-defined rules and conditions, these engines can adapt and personalize the learning experience in real time, helping learners to achieve their goals more quickly and effectively.

You may notice here a keyword – personalization. Yes, rule-based engines do offer personalization. Quite often, it’s a sufficient level of personalization to help you reach your strategic L&D goals.

By comparison, AI specifically refers to using advanced machine learning algorithms to personalize and improve the learning experience for each user even further. AI differs from rule-based engines because it can learn from and adapt to new data and situations rather than relying solely on pre-defined rules and conditions.

To help explain this in more depth, here’s a specific example to understand the level of personalization that AI could achieve.

Let’s say you use an AI-powered learning platform to study a new language. The platform uses natural language processing (NLP) algorithms to analyze your performance and provide personalized feedback and content recommendations.

As you work through the platform, the AI algorithms continuously gather data on your learning patterns, including your strengths, weaknesses, and areas where you struggle. Over time, AI algorithms can use this data to identify patterns and predict your future performance.

For example, if the AI algorithms notice that you struggle with verb conjugation in the past tense, they might recommend specific exercises or resources to help you improve that area. If you consistently perform well on vocabulary quizzes, the AI might adjust the difficulty level of future quizzes to challenge you appropriately. By comparison, a rule-based engine will check a simple condition (“Has the learner completed X quiz with a score above 80? If yes, move to the next lesson, if no, retake the lesson until the score is above 80.” and so on).
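The contrast can be sketched roughly in Python. The first function is the fixed “above 80” rule from the paragraph above. The `SkillTracker` class is only a toy stand-in for the data-driven side: it counts errors per skill and surfaces the weakest one, which is far simpler than the ML and NLP models a real platform would use. All names are hypothetical and chosen for this illustration.

```python
from collections import defaultdict
from typing import Optional


def rule_based_next_step(quiz_score: float) -> str:
    # Fixed rule: a score above 80 moves on, anything else means a retake.
    return "next lesson" if quiz_score > 80 else "retake lesson"


class SkillTracker:
    """Toy stand-in for a learned model: tracks error rates per skill."""

    def __init__(self) -> None:
        self.attempts = defaultdict(int)
        self.errors = defaultdict(int)

    def record(self, skill: str, correct: bool) -> None:
        # Each answered exercise is one more data point about the learner.
        self.attempts[skill] += 1
        if not correct:
            self.errors[skill] += 1

    def weakest_skill(self) -> Optional[str]:
        # With no data at all there is nothing to recommend.
        if not self.attempts:
            return None
        # Pick the skill with the highest observed error rate.
        return max(self.attempts, key=lambda s: self.errors[s] / self.attempts[s])


tracker = SkillTracker()
for correct in (False, False, True):
    tracker.record("past-tense conjugation", correct)
for correct in (True, True, True):
    tracker.record("vocabulary", correct)

print(rule_based_next_step(85))
print(tracker.weakest_skill())
```

The rule only ever looks at one score against one threshold, while the tracker's answer changes as more interactions come in. That dependence on accumulated data is the part that the AI approach shares with this sketch.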

The more data the AI gathers and analyzes, the more accurately it can personalize the experience for each learner. As a result, you may find that the platform becomes more effective and engaging over time as it learns more about your unique learning needs and skill level.

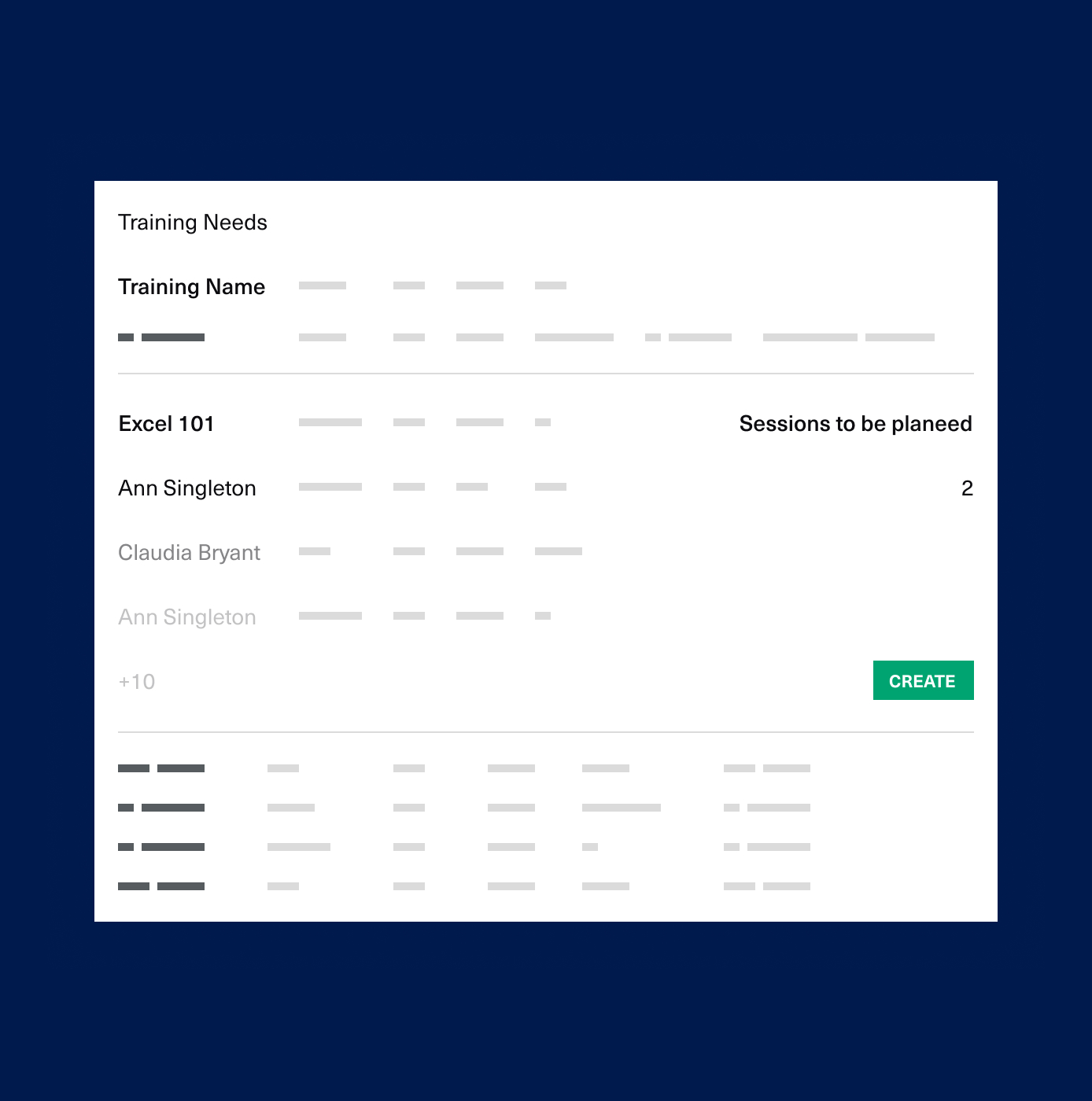

In my opinion, the relevant differentiator for your organization between rule-based engines and AI boils down to this: if there are identifiable patterns in each learner’s behavior that AI can learn from, if the way the learner interacts with the content allows this data to be captured, and if your organization allows capturing these patterns, AI will indeed pick up on them and adapt the learning experience. However, if the learning is less driven by each individual’s behavior and more by the organization’s needs (“all employees in XYZ department need to take ABC courses”), or if the content itself is not very interactive (video-based, text-based, fewer quizzes, little input from the learner in the form of free text or speech), then AI doesn’t do much for your L&D strategy.

Another point is (and this might be painful to read, but still quite true and frequent): if your organization is the type where employees don’t spend too much time learning, either because the workload is high, the organizational culture or management practices aren’t conducive to frequent voluntary learning, or the content offer is not that varied, AI is overkill and could backfire. If the algorithm doesn’t have enough data to learn from or the learning interactions are infrequent and too broad or varied, the recommendations will be poor, thus making it even less appealing for learners to access your learning offer.

Having a solid starting data set to train the algorithm is very important. Otherwise – and this is a BIG issue – you will be doing what is called a “cold start”. If you have too little or no data at all to feed the algorithm, the AI will simply offer completely random initial recommendations to users, relying on trial and error (aka users liking/disliking each recommendation) for a while until it self-calibrates. This makes for a pretty awkward first couple of months, where you risk pushing learners away – it ends up being like a bad Netflix or YouTube browsing experience where, one hour later, you still couldn’t find anything worth watching, and the sneaky, nasty thought gets stuck in your head that this platform is just bad and useless, so you abandon it. Our patience is limited, especially as busy workers; we don’t have the time to train the algorithm.
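A small Python sketch can show why a cold start feels random. It assumes a deliberately simple recommender that ranks courses by a smoothed like ratio, (likes + 1) / (likes + dislikes + 2). That scoring formula, the course names, and the feedback numbers are my own assumptions for illustration; real recommendation systems are considerably more sophisticated.

```python
def smoothed_score(likes: int, dislikes: int) -> float:
    # Smoothed like ratio: with no feedback at all, this is exactly 0.5.
    return (likes + 1) / (likes + dislikes + 2)


def rank(feedback: dict) -> list:
    # Order courses from highest to lowest score.
    return sorted(feedback, key=lambda c: smoothed_score(*feedback[c]), reverse=True)


# Cold start: no likes or dislikes recorded yet (hypothetical courses).
cold = {"course_a": (0, 0), "course_b": (0, 0), "course_c": (0, 0)}

# After some weeks of learner feedback, as (likes, dislikes) pairs.
warm = {"course_a": (1, 4), "course_b": (8, 1), "course_c": (3, 3)}

cold_scores = {course: smoothed_score(*fb) for course, fb in cold.items()}
print(cold_scores)
print(rank(warm))
```

At cold start every course gets the same score, so whatever the learner sees first is arbitrary. Only once feedback accumulates does the ranking start to mean something, and it is the learners who pay for that calibration period with their time.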

In case you do plan on using AI with a cold start, be careful with how you announce the big launch to your colleagues. You might be excited and oversell this brand-new, promising platform. If it turns out to be a dud, this is a difficult place of low credibility to recover from, especially alongside the typical perception that learning is a burden or that L&D is a cost center.

From an implementation standpoint, the big difference comes from this: rule-based engines rely on someone creating a structured format – “if-then” statements and information that is categorized – so a somewhat bigger upfront effort. But don’t let this scare you; it’s easier than it sounds. AI, by contrast, just receives a giant set of data and learns from it and, later on, from live user interaction. AI requires less upfront work, but it does require that *some* data exists.

Summarizing and reorganizing the info a little bit, here are some (more) differences between the two:

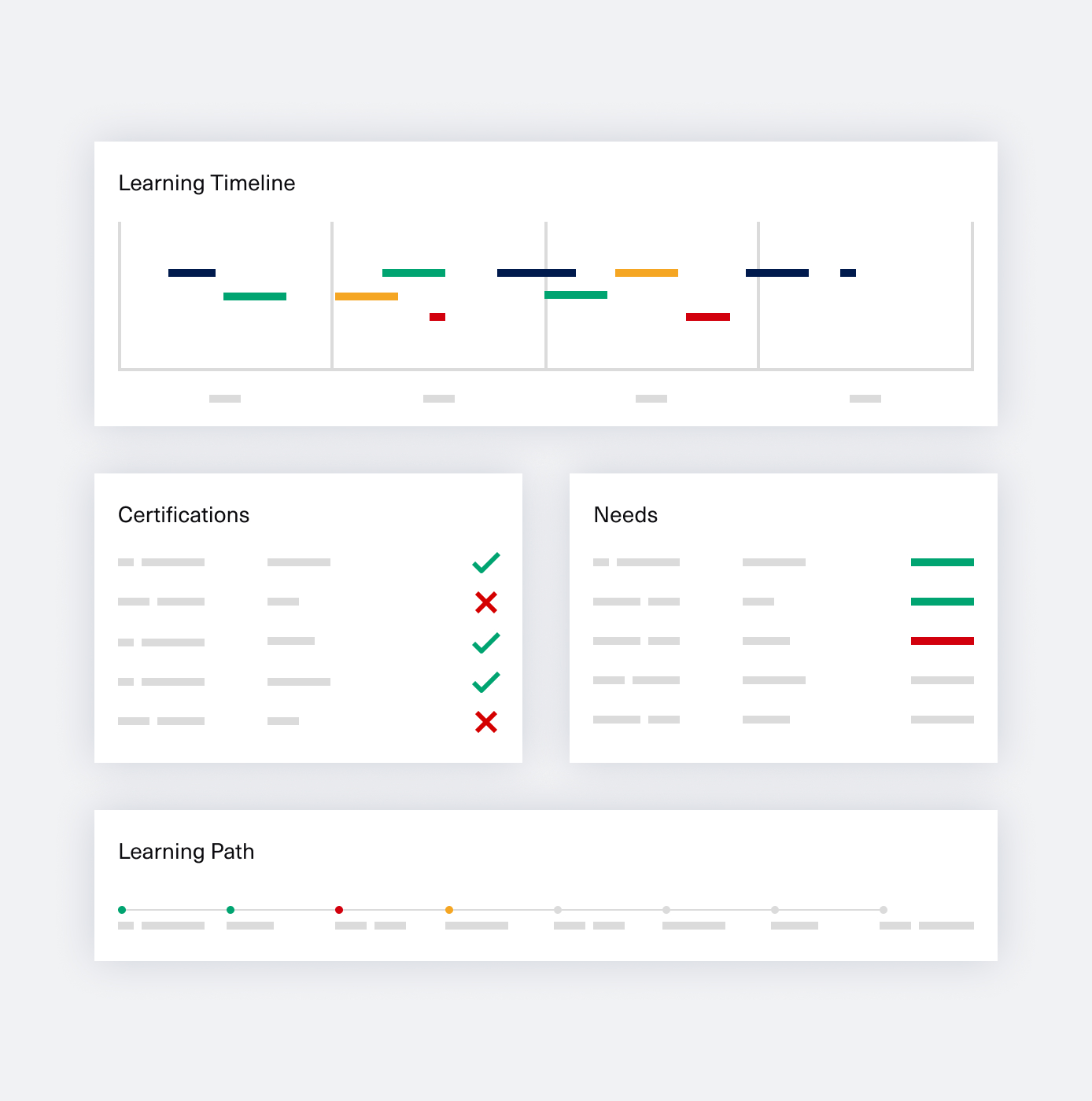

Approach: True AI, relying mainly on ML and NLP, is an approach where the system learns from data and adapts its behavior without being explicitly programmed. In contrast, rule-based engines use predefined rules or decision trees to guide the system’s behavior.

Personalization: True AI can personalize the learning experience by adapting to each learner’s specific and detailed needs and preferences, whereas rule-based engines deliver the same content to everyone in a learner *group* (you can think of learner grouping as based on demographic criteria, but also on completion criteria, similar to the example of “if learner finishes A above 80%, give them access to B; if not, repeat until the result is above 80%”).

Flexibility: True AI is more flexible than rule-based engines, as it can learn and adapt to new situations and data over time. Rule-based engines, on the other hand, require manual updates to the rules or decision trees to adapt to new situations.

Complexity: True AI is more complex than rule-based engines, involving sophisticated algorithms and statistical models to analyze and learn from data. Rule-based engines are typically simpler and rely on predefined rules or decision trees. Thus, the processing power and cost of AI are higher than those of rule-based L&D tech.

Accuracy: True AI can make more accurate predictions about learner outcomes and provide more personalized feedback than rule-based engines. It can learn and adapt to each learner’s unique characteristics (IF it has the right access to data).

How do I know what I’m paying for?

Moving on, let’s dive into why I am telling you this. It does sometimes happen that learning tech vendors claim to be using true AI but, in fact, are using rule-based engines in their L&D tool without you knowing it. It’s not something incredibly obvious to the naked eye: you will still see interfaces with “Recommended just for you” or “Because you watched X, here’s Y and Z”. As mentioned before, both mechanisms offer recommendations to learners – and they’re both legitimate recommendations if we’re honest. But the degree of personalization is the key difference here.

To check what kind of approach a vendor is using, you can take the following steps:

Ask for more information: Ask the vendor for more details on how their system works and uses AI. Specifically, ask for information on the type of AI algorithms they use, the data they train the system on, and how it adapts to each learner.

Look for information in the tool’s documentation: Check the tool’s documentation, website, or user guide for information on how the tool works. The tool’s creators may explain whether they use AI, rule-based engines, or a combination of both.

Request a demo: Ask the vendor to demo their system and observe how it operates. Look for signs of personalization and adaptability, such as customized learning paths, personalized feedback, or adaptive assessments.

Observe the tool’s behavior: Watch how the tool operates when delivering content to learners. If the tool personalizes the learning experience by adapting to each learner’s needs and preferences, it may use AI. On the other hand, if the tool delivers the same content to similar learners (based on that grouping described earlier), it may be using rule-based engines.

Check for transparency: Look for transparency from the vendor in terms of how they are using AI in their system. They should be able to explain the role of AI in their system clearly and provide examples of how it is used to personalize the learning experience.

Consider the complexity of the problem: If the problem the system is solving is relatively simple, it may not require true AI. Rule-based engines may be sufficient in this case.

Consider the tool’s capabilities: AI-powered L&D tools are typically more advanced than rule-based tools, so consider the tool’s capabilities. For example, AI-powered tools may be able to provide more personalized feedback, adapt to each learner’s pace, and make more accurate predictions about learner outcomes.

Verify the vendor’s claims: Check the vendor’s claims about their use of AI against industry standards and best practices. Look for independent research or third-party assessments of their system to verify their claims.

Why does this matter?

As a vendor in the L&D space, we spend time looking at competitors and analyzing their moves, technological advancement, and marketing approach. Some claim to use AI, and some don’t, and this is important for a number of reasons.

First, is AI relevant to the problem you are trying to solve?

It is often the case that AI is just a buzzword being thrown into the conversation to seduce you into becoming a paying customer. If your business case does not lend itself well (or at all) to using AI, then you don’t need it; it’s as simple as that. You won’t be getting extra “juice” from an AI tool if, for example, the data-gathering capabilities are not there. Consider this if you’re more on the compliance side of learning, if you don’t have a use case for a learning experience platform (LXP) or any immediate intention to buy one, or if you are a smaller organization or have very straightforward job roles and responsibilities defined. AI might simply be overkill.

You don’t have to jump on the bandwagon just because AI is on everyone’s lips these days. That’s not only because of the amount of data required to train the model on your particular organization and all the other potential challenges described above, but also because you might not end up using it to its fullest extent. It’s the same as buying a fancy new car only to be stuck in traffic for hours on the way to the office when you could have easily taken a bike or public transport and arrived sooner and more cheaply. It’s ok to buy just what you need and no more, as long as the platform does its job well, is quick to onboard, and gives you accurate, timely data on which you can take action.

Second, is the typically increased cost associated with an AI-powered tool justified?

In some cases, it might not be. You can expect a vendor using AI to charge you much more than one using standard automation practices like rule-based engines because computing power and server space are expensive. If cost is of high importance to you, then this might be something you want to look into and clarify before you decide to buy. If your ROI from AI vs. rule-based tech is similar, and the goal you are trying to reach is legitimately achievable without AI, why pay more?

And third, is your organization truly ready to use AI?

I’m specifically referring here to the AI readiness I mentioned toward the beginning of the article. Are there better initial use cases where your organization could invest in AI? For example, gathering the right kind of data to showcase employee performance. There’s been a long debate on measuring work output, and it’s still an ongoing hot topic. I believe that this is the essential first step that needs to be tackled before we dive into AI-powered learning tech that “improves performance”.

Another aspect is the level of detail observed when learners *experience* the organization’s learning offer. If you’re mostly offering unidirectional learning (old-school eLearning, video, text, and lots of live training), there isn’t much data for algorithms to capture. A course completion is as good (or bad) an indicator as any that someone *knows* how to do something because, really, no one actually knows. It makes no difference if you put AI, a rule-based engine, or any other method on top of that, because there’s no further output than “Complete/Incomplete”. What’s there to personalize? Your content needs to be granular and interactive enough for AI to actually learn from employees while they are learning – in which case, an investment in better content might be more important to consider before or during the adoption of AI.

In conclusion

I realize I might be conveying a lot of skepticism or reluctance in this article. I’ve thought about why this is, and here is my conclusion: I’m not a fan of going with the trend just because everybody’s talking about it. If there are other priorities to consider before an investment in AI-driven L&D tech that could have a better initial impact AND will prove useful for an AI implementation further down the line, I strongly believe that this step-by-step approach produces better overall results. This applies, in my opinion, to any organization looking at improving its L&D ROI and business bottom line.

I will, however, try to end on a positive note, as I truly am very excited to see what happens next in learning technology and what kind of history-making changes are inevitably coming. I fully expect that schools and universities as we know them will fundamentally change how they prepare kids and young people to become part of society and the workforce market. The notion of workplace and, implicitly, workplace learning, I believe, will see some major shifts.

This, combined with the ever-increasing desire that (soon to be) working-age people have for flexibility in their job and growth opportunities, will make for a very fun couple of years ahead. Of course, this change will happen in waves. Not all companies’ organizational structures or business models lend themselves well to flexible working or increased employee autonomy, but significant changes will come, nevertheless.

And learning at and for work, and more broadly, learning for each individual’s professional advancement, are fundamentally driven and shaped by the latest technological developments, among which Artificial Intelligence takes the top position.

Personalization is increasingly becoming a key learning objective as we collectively acknowledge that each individual requires specific knowledge and support while navigating the complexities and challenges of day-to-day work.

And don’t worry; the robots aren’t even close to taking our jobs – we’re still safe.