Why are you making a change in your learning tech stack?

Let’s start with the mandatory first step of this journey: why are you changing your learning technology?

Is this a voluntary change? Maybe your current tech no longer supports your needs, and the manual workarounds are taking over your daily schedule. Perhaps the vendor is no longer investing in the platform, and you’re seeing more bugs and less support. Maybe you realized that content without data isn’t giving you sufficient insights. Whatever the reason, your tech no longer makes sense for your current learning & development context, and a change is needed.

Is it an imposed change? Does this sound familiar: “The Group is renewing the entire tech stack and buying a blanket solution that ‘has everything’”? There are many possible reasons: cost-cutting, unifying the HR admin experience, or capturing data from multiple business processes. Sometimes it works out, and everyone is happy. Sometimes it doesn’t. I won’t go into too many details about the imposed infrastructure change. If this new tech wasn’t your first choice (or even your third), try to push back to see if you can find a better solution. Look for something that integrates (seamlessly) with the new blanket tech, or at least flag all the potential risk areas where this new technology is going to impact L&D.

Make a case for the expected increased workload and ensure that someone acknowledges and takes responsibility for it. Any wrong tool ultimately translates into lost money and opportunities for the company, which should be made clear from the beginning. Where can these losses come from? If your workload increases or gets more complicated due to this new tech, L&D has less time to do meaningful work (like needs gathering, LXD, or data analysis), which ultimately impacts the effectiveness of L&D and its potential to do good in the organization.

Regardless of what sparked the change in your L&D tech stack, here are some things to consider before, during, and after implementation.

First of all, be very intentional about the goal you are aiming to achieve. Is this goal clear? What does the expected outcome look like? Some examples might be:

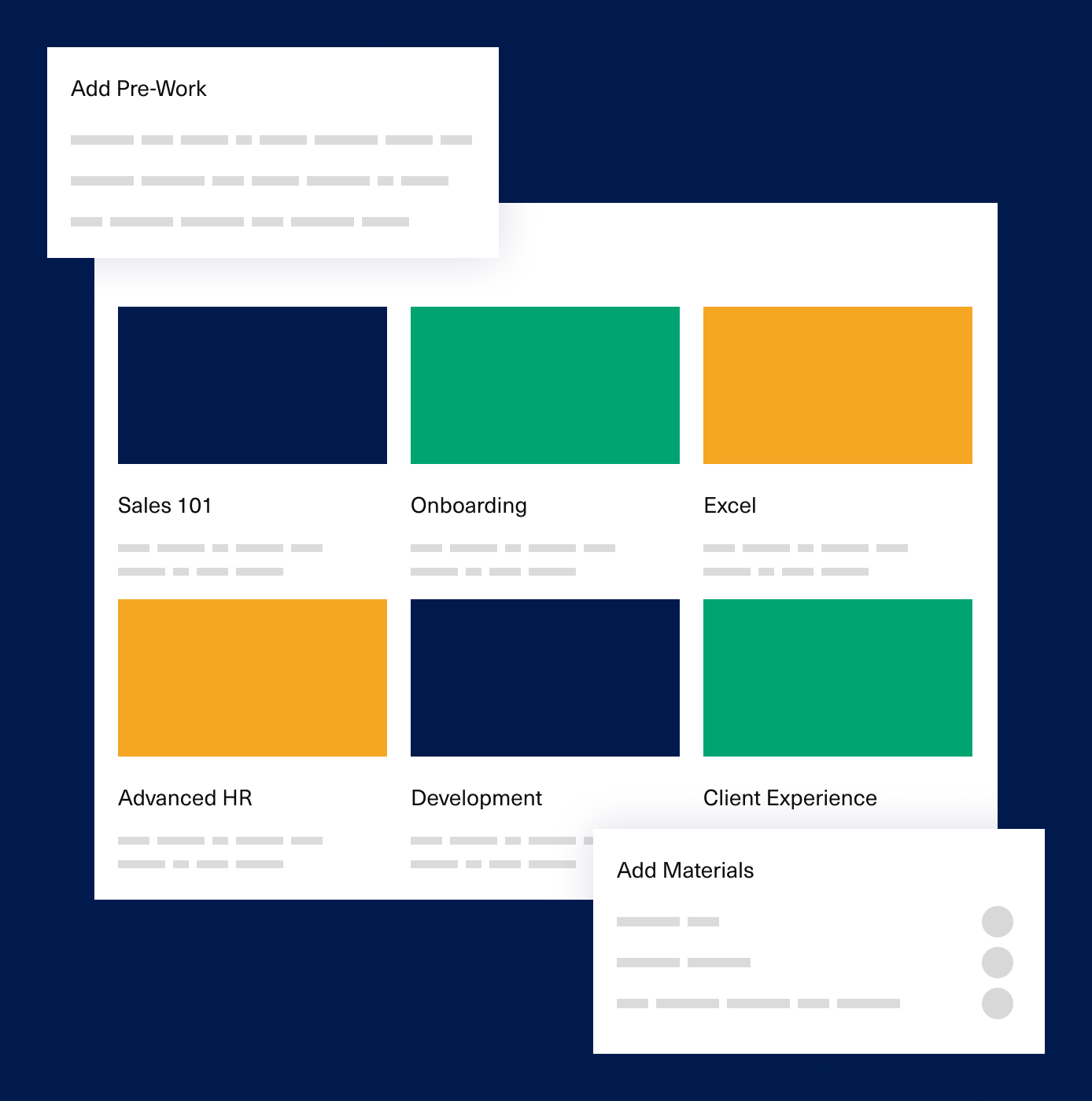

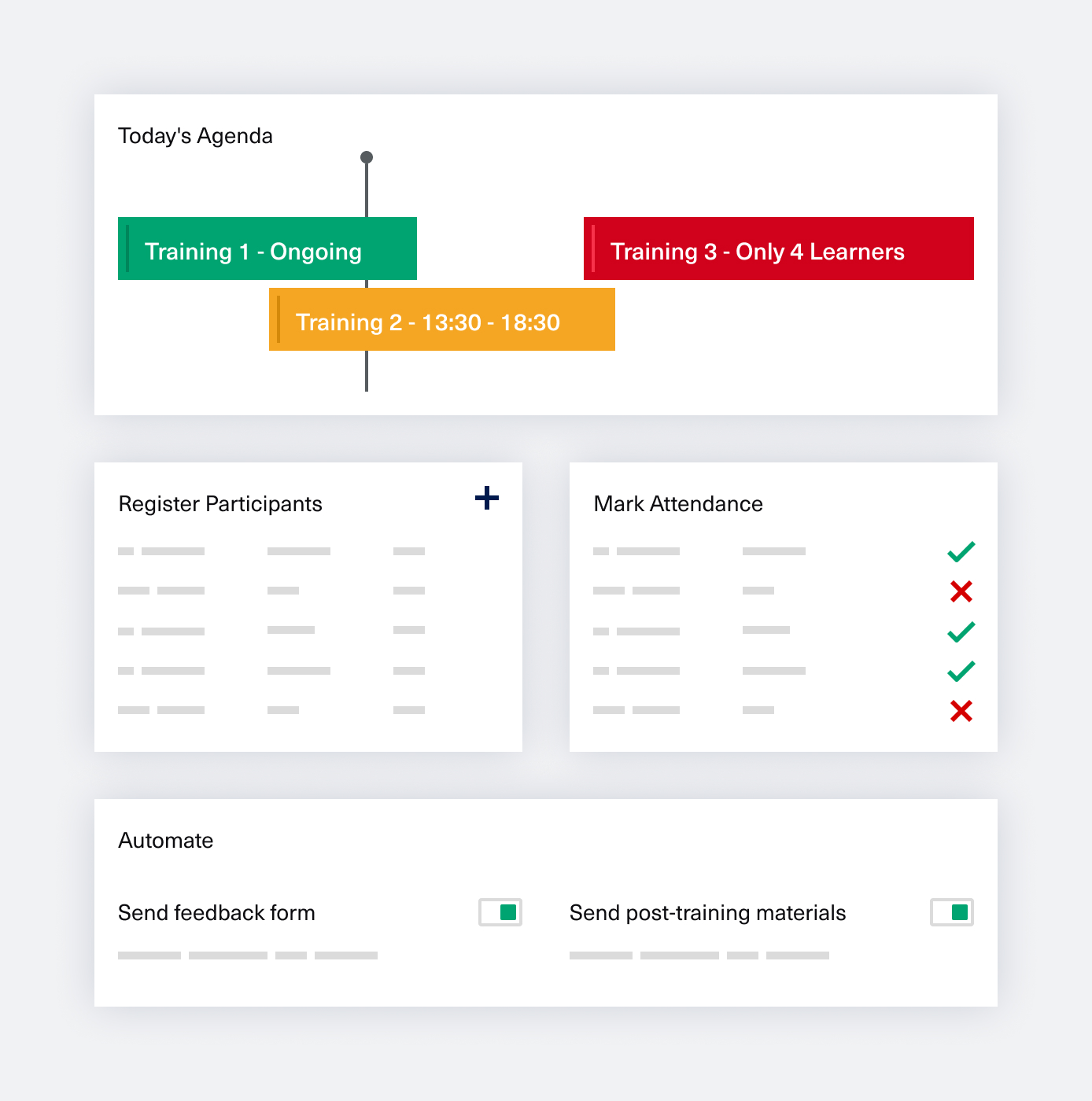

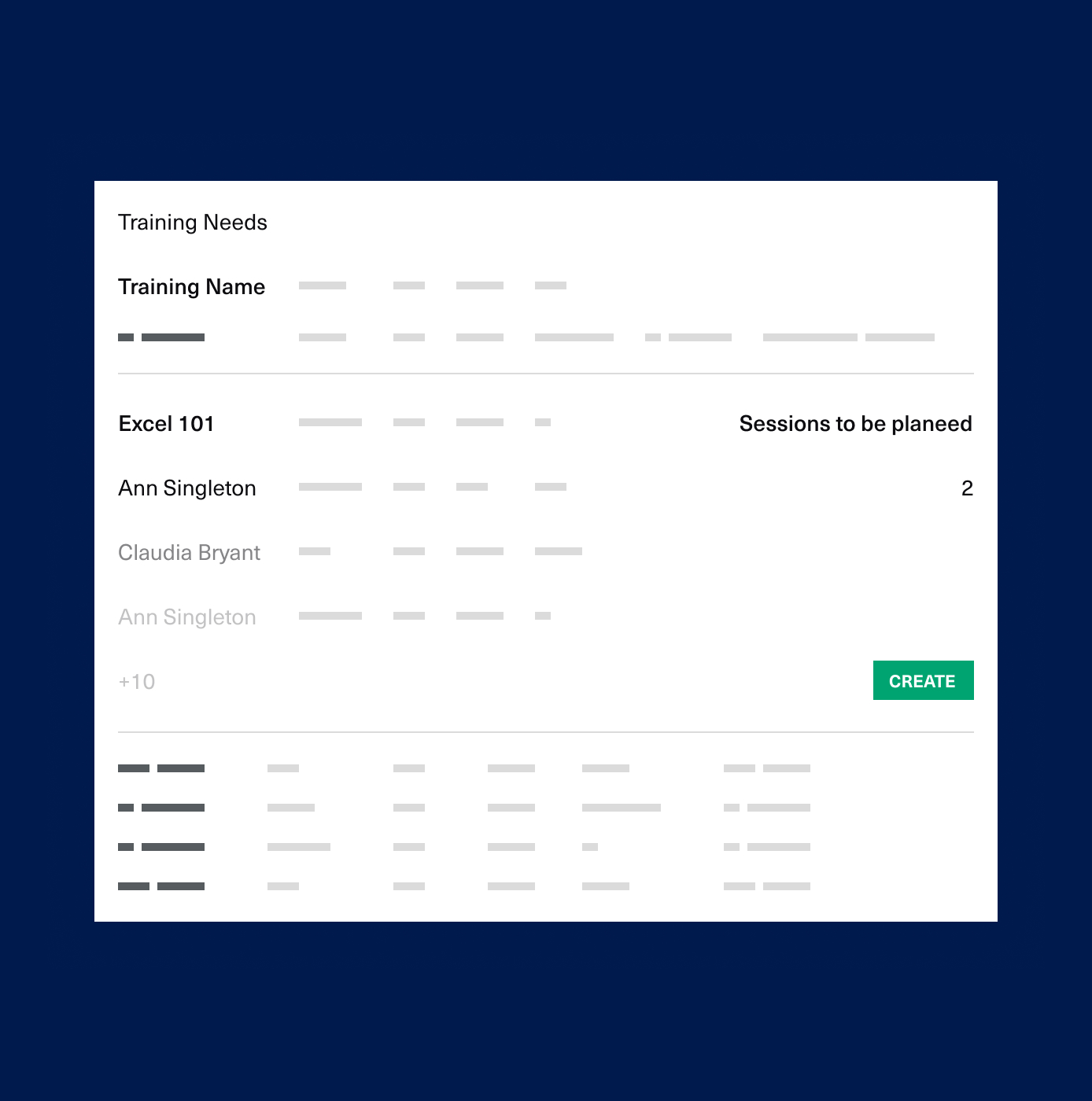

- I’m stuck doing admin work all day – emails, calendar invites, issuing certificates, handling last-minute replacements, accommodating busy trainers’ schedules, marking attendance, etc. It currently takes me 5h of work to plan and manage one training session. Goal? I want a platform that automates live training management. Measurement? I expect the effort to come down to a maximum of 1h of admin work per session.

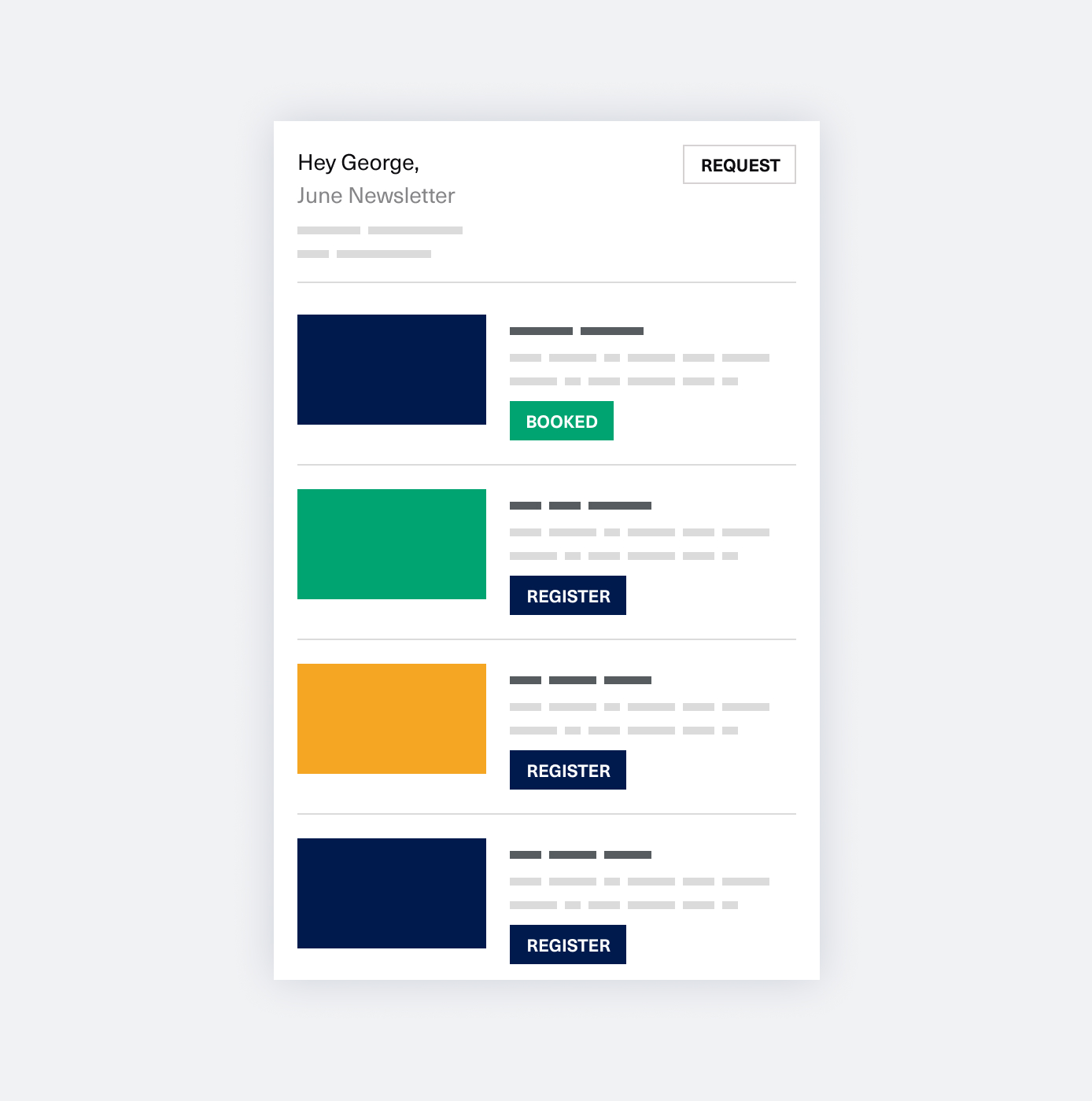

- Our colleagues constantly complain there’s nothing relevant to learn on the internal platform. The content is dusty and outdated, and learners aren’t excited about the current learning offer, or don’t even care about it. Employees spend very little time learning. We never meet the X-hours-per-year metric. Goal? A place where employees can access content to their liking, have multiple options to choose from, and can do both work-related learning and personal development. Measurement? I expect that navigation will be easy and intuitive, the learning offer will be varied, and there will be personalization mechanisms. I expect a 5X increase in learning hours and that the employee engagement survey highlights the new LXP specifically.

- It takes us hours upon hours to produce our monthly compliance reports. To provide any transparency over our compliance learning, I extract data from at least 3 sources. I’m drowning in VLOOKUPs and always late with the report. Goal? Reporting will become a secondary activity, not my day’s primary focus. Measurement? It shouldn’t take me more than 30 minutes to extract a comprehensive mandatory learning report, and (if you’re feeling extra cheeky) the report should be generated automatically and sent via email to all the relevant stakeholders without me having to lift another finger.

The list can go on, but I’m sure you catch my drift. Whatever your goals are, make sure A. that you have them and B. that you have clarity on how you will know they are being met.
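If it helps to make “how you will know” concrete, here is a minimal sketch in Python of writing the three example goals down as targets and checking measured values against them. The `Goal` structure, the `review` helper, and the baseline of 4 learning hours per year are my own illustrative assumptions, not part of any vendor’s tooling; swap in your own numbers.

```python
from dataclasses import dataclass


@dataclass
class Goal:
    """One measurable goal for the new learning tech (illustrative structure)."""
    name: str
    target: float
    higher_is_better: bool  # True for learning hours, False for time spent on admin

    def is_met(self, measured: float) -> bool:
        """Return True when the measured value reaches the target."""
        if self.higher_is_better:
            return measured >= self.target
        return measured <= self.target


# Hypothetical placeholder: replace with your current learning hours per employee per year.
BASELINE_LEARNING_HOURS = 4.0

# Targets mirror the three examples above (1h of admin, 5X learning hours, 30-minute report).
goals = [
    Goal("admin hours per training session", target=1.0, higher_is_better=False),
    Goal("learning hours per employee per year",
         target=5 * BASELINE_LEARNING_HOURS, higher_is_better=True),
    Goal("minutes to extract the compliance report", target=30.0, higher_is_better=False),
]


def review(goals: list[Goal], measurements: dict[str, float]) -> dict[str, bool]:
    """Map each goal name to whether the measured value meets its target."""
    return {goal.name: goal.is_met(measurements[goal.name]) for goal in goals}
```

Feed `review` the numbers you measure during a trial or Pilot, and you get a plain met / not met answer per goal to bring into your next vendor conversation.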

Next, get the vendors to explain exactly how they will help you achieve these goals. This is the time to be persistent and clearly and repeatedly express your requirements and expectations.

When you change to new tech, common sense dictates that you should have at least everything you used to have, but that it works better/faster/smoother/more precisely. Otherwise, why are you making a change at all?

But how can you find this out before even signing the contract and locking yourself into another (at least) 2 years of a new vendor relationship?

You achieve this goal by testing the new solution in a demo trial or asking for a Pilot before committing long-term. What’s important here is that you do it with relevant data that are as close to reality as possible. If you also need some custom features built, ask for references from existing customers and get their feedback on the relationship with this vendor.

Ask for things like:

- What happens when there’s a platform error that’s impacting multiple users? Find out about the responsiveness and accuracy of support offered.

- What’s the approach in case there’s a detailed report to be extracted or a new data field that needs to be added?

- What is the dynamic during implementation? Do you feel like this is a true collaboration with a technology partner?

- What kinds of success metrics can I ask for to know that we are on track with the expected outcomes of our partnership (speed to delivery, the accuracy of data capture and measurement, the relevance of content suggestions provided, time saved through automation, etc.)?

It’s unrealistic to go through such trials or Pilots with your initial pool of 12 learning tech options. Still, you should aim to do this for your final 2 or 3 contenders. At the very least, plan a Pilot and ask for references from the one vendor you do choose. We recently came across a company that asked us to introduce them to one of our current customers as part of their vetting process for selecting an LMS/LXP vendor. We were pleasantly surprised to see they had committed to doing the same for all their new tech vendors (not just L&D, but HR or other tech investments). We were more than happy and comfortable to put them in contact with our largest customer. Any vendor should do the same for you.

There are many reasons to make changes in your learning tech stack. Whether it’s software that no longer matches your needs, doesn’t fit the company’s strategic direction, or isn’t flexible/responsive/fast/reliable/scalable enough, you should have a specific goal in mind. You should continually assess contenders based on this goal throughout the buying process and, very importantly, during implementation. Any vendor reluctant to give you answers or beating around the bush will likely not be a promising technology partner in the long run.

What’s your relationship with IT?

Another critical factor in any tech onboarding process is getting the IT team on your side. Make an effort to find an IT champion who is willing to work with you throughout Procurement and Implementation and who could help even earlier, during the initial research phase.

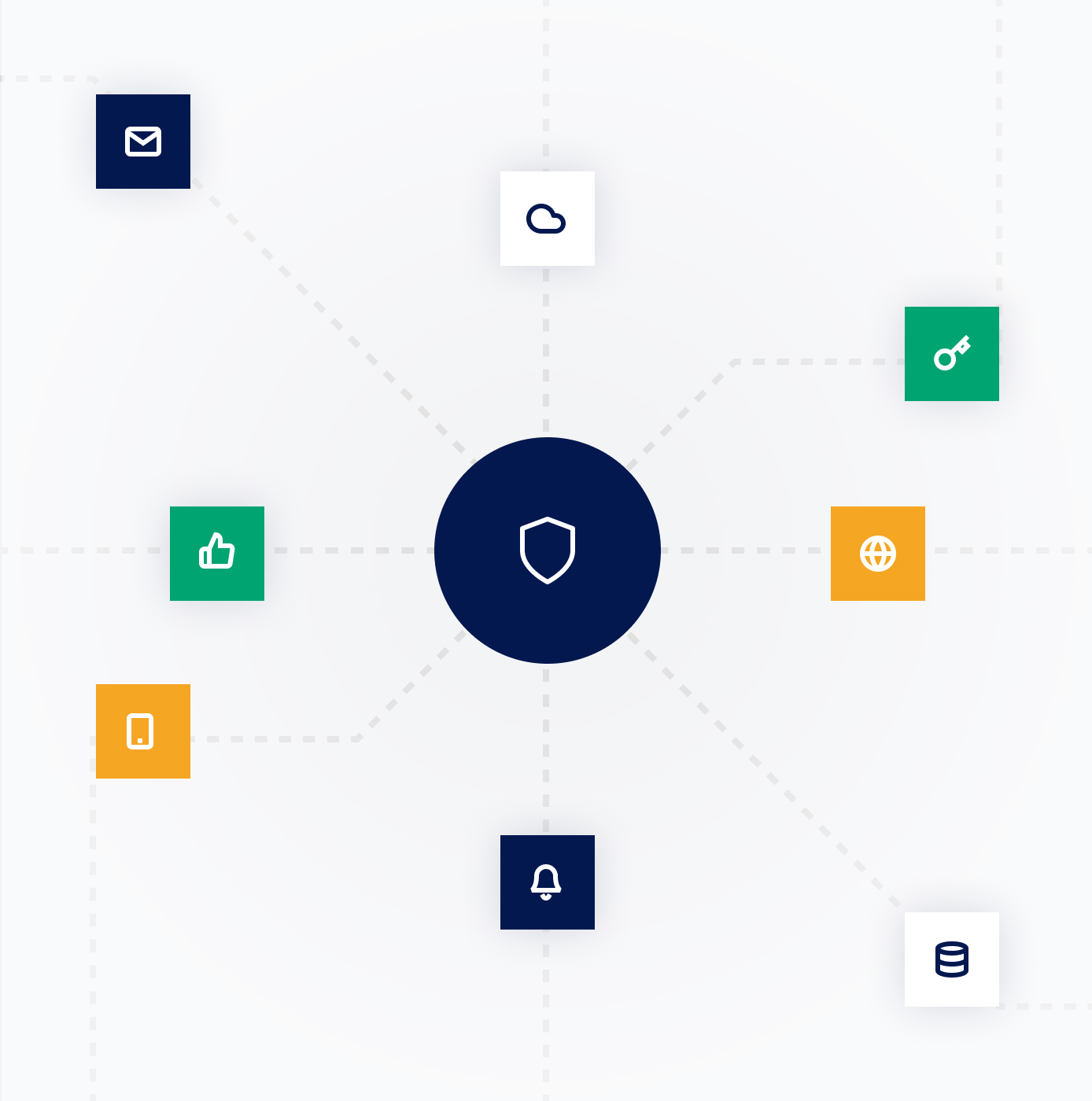

Your IT is likely interested in security implications, infrastructure costs, data privacy, platform availability, etc. How do you de-risk adopting new learning tech in the eyes of (and, hopefully, together with) IT?

Let’s say, for example, that your current LMS is on-premise, and you’re considering a cloud solution for the future. What does your IT team think about this? Is this a hard pass, or is this negotiable? Do you need to see ISO/SOC certifications? Ask for them. Does the new vendor say they’re compliant with standard XYZ? Run your own pen test, or ask them to share their existing pen-test reports (ideally, not from a ghost company).

Better yet, try and organize a meeting between your IT and their technical team (there should be someone technical on the vendor’s side, able to handle a convo with your IT). Attend this conversation even if you don’t expect to understand everything debated. Ask for clarification and mediate the discussion in the direction you want: do you need more certainty or clarity? Push the vendor for answers. Do you feel your IT is being overly cautious? Understand where they’re coming from and explain your objectives. Either way, make every effort to involve IT early in the conversation, as they can help you immensely or be your biggest blocker.

What about data migration, historical reports, and continuity between systems?

This step is all about data. The first thing you need to make sure of is that you have the data from the previous vendor. You own it, you’ve generated it, and there’s no question that you should receive it as part of your offboarding process. If the vendor is unresponsive or not cooperating, you can involve Legal – though hopefully, things never have to get that far.

While gathering your historical data, work with your new vendor to articulate your new data requirements. Usually, much of the conversation happens around reporting and analytics. You can (and should) have conversations like, “I want an XYZ report that looks like this, and it should take me ABC time to extract this information.” If your L&D team’s activity is very data-intensive (compliance learning, audit trails, multiple stakeholders requesting reports frequently), then make it a point to address this in your conversations with your new vendor. Hold your vendor accountable to the standards promised during sales – this is a big one. Reporting ranks pretty high among many L&D professionals’ pain points, and too often there is a break between what is promised during early sales negotiations and what is delivered during technical implementation.

Failing to focus on the data aspects during the early stages of implementation – the two key points here being historical data imports and ongoing reporting needs – can lead to later gaps in your reporting. You’ll risk doing a lot of manual processing of endless worksheets. Plan ahead for future success based on past frustrations.
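One way to catch gaps in a historical data import early is to compare the export from your previous vendor with what actually landed in the new platform. Below is a minimal sketch in Python; the function name and the field names (`employee_id`, `course_id`, `completed_on`) are assumptions for illustration, so use whatever columns your own exports contain.

```python
def find_missing_records(legacy_rows, new_rows,
                         key_fields=("employee_id", "course_id", "completed_on")):
    """Return the legacy records that have no match in the new platform.

    Rows are plain dictionaries, for example as loaded from a CSV export.
    The key fields are hypothetical: pick the columns that identify one
    learning record in your own data.
    """
    def record_key(row):
        return tuple(row[field] for field in key_fields)

    imported_keys = {record_key(row) for row in new_rows}
    return [row for row in legacy_rows if record_key(row) not in imported_keys]


# Made-up sample data, only to show the shape of the check.
legacy_export = [
    {"employee_id": "E1", "course_id": "GDPR", "completed_on": "2023-01-10"},
    {"employee_id": "E2", "course_id": "GDPR", "completed_on": "2023-02-03"},
]
new_platform_export = [
    {"employee_id": "E1", "course_id": "GDPR", "completed_on": "2023-01-10"},
]

missing = find_missing_records(legacy_export, new_platform_export)
```

Anything left in `missing` is a record to raise with your new vendor before Go Live, while fixing it is still part of the implementation.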

And last but not least – what is the ongoing level of support you can expect?

Never shy away from asking for help. Take advantage of the transition phase to establish rapport and find your “buddy” – an Account, Customer Success, or Client Relationship Manager. Ensure your buddy is still there after the Go Live milestone or the Critical Care period allotted through the contract. Who is your dedicated account manager? What’s the expected level of support? What are the typical SLAs for any support requests you might have? You should have answers to these questions before the Go Live. This sets you up for success in the long run. Sometimes vendors can suddenly seem unreachable after implementation is finished: you’re redirected to the documentation, left to find manual workarounds, or your emails go unanswered for days.

Expect that you might still have questions about very trivial things anywhere from the first 3 months up to a year – How do I create this session? How do I assign that path? How do I extract these reports? Make sure there’s someone there to answer these questions and help you, or at least understand the support model and prepare yourself accordingly. If you won’t have someone to call/email, navigate the documentation beforehand for your most complex use cases. Challenge what you see, receive, or get access to and make sure it serves your purpose. And don’t do it just for yourself or your team’s roles: do you expect Managers, Mentors, Evaluators, or other functions to access and use this technology somewhat frequently? Find out where the documentation for those roles exists and ask them to have a look and flag if anything doesn’t make sense to them or is hard to follow. Better still, involve them in the Pilot and ask them to test the new tech.

All in all, make sure you do a proper UAT – User Acceptance Testing. Account for at least 80% of your everyday workflows and workload – things that are pretty standard and should flow smoothly. If time allows, try and test out your most complex exception flows and ask the vendors for workarounds (if there’s no plan to solve these through technology) or an ETA for when changes will be implemented (if you know they have these on the roadmap).
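To check whether your UAT really accounts for 80% of your everyday workload, you can weight each workflow by how often it runs. Here is a minimal sketch in Python; the workflow names echo the questions above, while the monthly volumes and the `uat_coverage` helper are hypothetical placeholders for your own list.

```python
def uat_coverage(workflows):
    """Return the share of monthly workload (0.0 to 1.0) covered by tested workflows."""
    total_runs = sum(w["runs_per_month"] for w in workflows)
    if total_runs == 0:
        return 0.0
    tested_runs = sum(w["runs_per_month"] for w in workflows if w["tested"])
    return tested_runs / total_runs


# Hypothetical volumes: replace with your own workflows and how often they happen.
workflows = [
    {"name": "create a session", "runs_per_month": 40, "tested": True},
    {"name": "assign a learning path", "runs_per_month": 30, "tested": True},
    {"name": "extract a compliance report", "runs_per_month": 20, "tested": True},
    {"name": "replace a trainer at the last minute", "runs_per_month": 10, "tested": False},
]

coverage = uat_coverage(workflows)
meets_threshold = coverage >= 0.80  # the 80% bar mentioned above
```

Weighting by frequency is a design choice: it keeps a rarely used exception flow from counting as much as the tasks you do every day, which matches the idea of covering the standard flows first and the complex exceptions if time allows.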

This sounds very project management-y. Do I have to do all this work?

Yes and no. “Yes,” meaning this implementation is indeed a project; it has milestones, objectives, and stakeholders who should be consulted on giving the green light to proceed. Treat it as such. “No,” meaning that you don’t have to do this yourself as an L&D professional, especially if this isn’t the kind of work you’re familiar or comfortable with. You can ask for the help of someone else in the organization, maybe someone on your team who’d like to take on this challenge, or perhaps you even have a dedicated PMO that can allocate some hours per week for this implementation. Whatever the case, you must have an assigned person acting as the Project Manager, who holds you and everyone else accountable for the deliverables. After all, your goal is to plan and implement today for an objectively better future, so you should approach any learning tech implementation with this mindset.

And don’t freak out about the level of detail or the amount of work explained here. So far, all that I’ve mentioned can realistically be done within a couple of weeks, especially if some of the components are already there. Let’s say, for example, that you’ve done some prior research throughout a couple of months, here and there, and asked for recommendations; you more or less know already that you’d want to work with this LXP or that LMS. If your workflows aren’t incredibly complex or non-standard, you could be up and running with a new tool in 2 weeks, with tests, integrations, and all – not including Procurement here, as this phase tends to take a while for most companies. But if you know that your organization’s needs are more complex or that the goals you hope to achieve with this new learning tech are long-range, then you can’t escape the PM aspect of it, and you should take it seriously.

In conclusion

This can seem like an overwhelming amount of information and detail to be aware of at the very beginning of your relationship with a new vendor. But, as the saying often attributed to Benjamin Franklin goes, failing to prepare is preparing to fail.

If you don’t invest this time, energy, and attention early on, you might not solve the problems you aim to solve. You will then be in a worse position than when you started: not only did the problems not go away, but you’re handling them manually or with complex workarounds – which offer plenty of opportunity for human error, data gaps, and processes that only exist as tribal knowledge, issues that compound over time and always land back in your lap. On top of that, you’ve also made an expensive tech investment that you now need to carry further and potentially also justify, putting your credibility on the line and making everyone else (and possibly yourself) reluctant to take such a risk again.

Take any new learning technology implementation step by step and start by working back from your goal. You’re already used to doing that with Learning Experience Design, so this is not that different.